Pathogen detection from (direct Nanopore) sequencing data using Galaxy - Foodborne Edition

| Author(s) |

|

| Editor(s) |

|

OverviewQuestions:

Objectives:

What are the preprocessing steps to prepare ONT sequencing data for further analysis?

How to identify pathogens using sequencing data?

How to track the found pathogens through all your samples datasets?

Requirements:

Check quality reports generated by FastQC and NanoPlot for metagenomics Nanopore data

Preprocess the sequencing data to remove adapters, poor quality base content and host/contaminating reads

Perform taxonomy profiling indicating and visualizing up to species level in the samples

Identify pathogens based on the found virulence factor gene products via assembly, identify strains and indicate all antimicrobial resistance genes in samples

Identify pathogens via SNP calling and build the consensus gemone of the samples

Relate all samples’ pathogenic genes for tracking pathogens via phylogenetic trees and heatmaps

Time estimation: 4 hoursLevel: Introductory IntroductorySupporting Materials:Last modification: May 18, 2023License: Tutorial Content is licensed under Creative Commons Attribution 4.0 International License. The GTN Framework is licensed under MITShort Link: https://gxy.io/GTN:T00208

Introduction

Food contamination with pathogens is a major burden on our society. In the year 2019, foodborne pathogens caused 137 hospitalisations in Germany (BVL 2019). Globally, they affect an estimated 600 million people a year and impact socioeconomic development at different levels. These outbreaks are mainly due to Salmonella spp. followed by Campylobacter spp. and Noroviruses, as studied by the Food safety - World Health Organization (WHO).

During the investigation of a foodborne outbreak, a microbiological analysis of the potentially responsible food vehicle is performed in order to detect the responsible pathogens and identify the contamination source. By default, the European Regulation (EC) follows ISO standards to detect bacterial pathogens in food: pathogens are detected and identified by stepwise cultures on selective media and/or targeting specific genes with real-time PCRs. The current gold standard is Pulsed-field Gel Electrophoresis (PFGE) or Multiple-Locus Variable Number Tandem Repeat Analysis (MLVA) to characterize the detected strains. These techniques have some disadvantages.

Whole Genome Sequencing (WGS) has been proposed as an alternative. With just one sequencing run, we can:

- detect all genes

- run phylogenetic analysis to link cases

- get information on antimicrobial resistance genes, virulence, serotype, resistance to sanitizers, root cause, and other critical factors in one assay, including historical reference to pathogen emergence.

WGS is more than a surveillance tool and was recommended by the European Centre for Disease Prevention and Control (ECDC) and the European Food Safety Authority (EFSA) for surveillance and outbreak investigation. WGS still requires isolation of the targeted pathogen, which is a time-consuming process, the execution is not always straightforward, nor the success is guaranteed. Sequencing methods without prior isolation could solve this issue.

The evolution of sequencing techniques in the last decades has made the development of shotgun metagenomic sequencing possible, i.e. the direct sequencing of all DNA present in a sample. This approach gives an overview of the genomic composition of all cells in the sample, including the food source itself, the microbial community, and any possible pathogens and their complete genetic information without the need for prior isolation. Several studies have demonstrated the potential of shotgun metagenomics to identify and characterize pathogens and their functional characteristics (e.g. virulence genes) in naturally contaminated or purposefully spiked food samples.

The currently available studies used Illumina sequencing, generating short reads. Longer read lengths, generated by third-generation sequencing platforms such as Pacific Biosciences (PacBio) and Oxford Nanopore Technologies (ONT), make it easier and more practical to identify strains with fewer reads. MinION (from Oxford Nanopore) is a portable, real-time device for ONT sequencing. Several proof-of-principle studies have shown the utility of ONT long-read sequencing from metagenomic samples for pathogen identification (Ciuffreda et al. 2021).

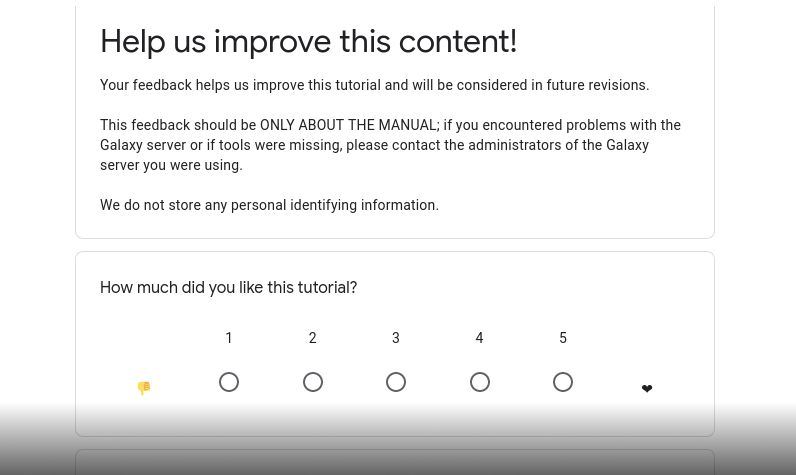

Comment: Nanopore sequencingNanopore sequencing has several properties that make it well-suited for our purposes

- Long-read sequencing technology offers simplified and less ambiguous genome assembly

- Long-read sequencing gives the ability to span repetitive genomic regions

- Long-read sequencing makes it possible to identify large structural variations

When using Oxford Nanopore Technologies (ONT) sequencing, the change in electrical current is measured over the membrane of a flow cell. When nucleotides pass the pores in the flow cell the current change is translated (basecalled) to nucleotides by a basecaller. A schematic overview is given in the picture above.

When sequencing using a MinIT or MinION Mk1C, the basecalling software is present on the devices. With basecalling the electrical signals are translated to bases (A,T,G,C) with a quality score per base. The sequenced DNA strand will be basecalled and this will form one read. Multiple reads will be stored in a fastq file.

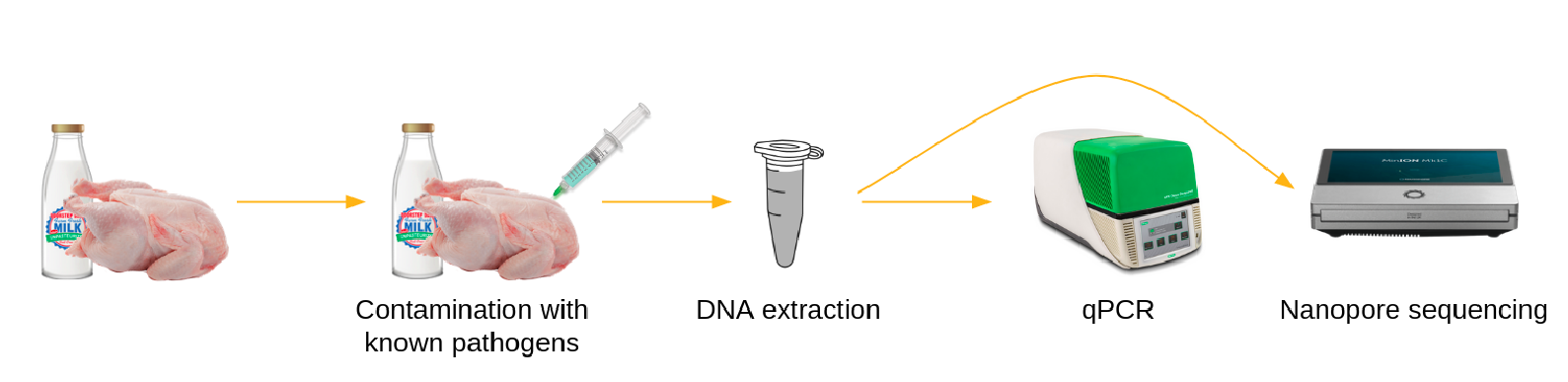

To identify and track foodborne pathogens using long-read metagenomic sequencing, different samples of potentially contaminated food (at different time points or different locations) are prepared, DNA is extracted and sequenced using MinION (ONT). The generated sequencing data then need to be processed using bioinformatics tools.

In this tutorial, we will be presenting a series of Galaxy workflows whose main goals are to:

- agnostically detect pathogens (What exactly is this pathogen and what virulence factors does it carry?) from data extracted directly (without prior cultivation) from a potentially contaminated sample (e.g. food like chicken, beef, etc.) and sequenced using Nanopore

- compare different samples to trace the possible source of contamination

To illustrate how to process such data, we will use datasets generated by Biolytix with the following approach:

Food samples, here chicken, are spiked with known pathogens, here:

- Salmonella enterica subsp. enterica in the sample named

Barcode 10 Spike 2 - Salmonella enterica subsp. houtenae in the sample named

Barcode 11 Spike 2b

DNA in the samples is extracted, analyzed with qPCR, and sequenced via Nanopore. We start the tutorial from raw data generated by Nanopore.

AgendaIn this tutorial, we will deal with:

Prepare Galaxy and data

Any analysis should get its own Galaxy history. So let’s start by creating a new one:

Hands-on: Data upload

Create a new history for this analysis

Click the new-history icon at the top of the history panel.

If the new-history is missing:

- Click on the galaxy-gear icon (History options) on the top of the history panel

- Select the option Create New from the menu

Rename the history

- Click on galaxy-pencil (Edit) next to the history name (which by default is “Unnamed history”)

- Type the new name

- Click on Save

If you do not have the galaxy-pencil (Edit) next to the history name:

- Click on Unnamed history (or the current name of the history) (Click to rename history) at the top of your history panel

- Type the new name

- Press Enter

Before we can begin any Galaxy analysis, we need to upload the input data: FASTQ files with the sequenced samples.

Hands-on: Import datasets

Import the following samples via link from Zenodo or Galaxy shared data libraries:

https://zenodo.org/record/7593928/files/Barcode10_Spike2.fastq.gz https://zenodo.org/record/7593928/files/Barcode11_Spike2b.fastq.gz

- Copy the link location

Open the Galaxy Upload Manager (galaxy-upload on the top-right of the tool panel)

- Select Paste/Fetch Data

Paste the link(s) into the text field

Press Start

- Close the window

As an alternative to uploading the data from a URL or your computer, the files may also have been made available from a shared data library:

- Go into Shared data (top panel) then Data libraries

- Navigate to the correct folder as indicated by your instructor

- Select the desired files

- Click on Add to History galaxy-dropdown near the top and select as Datasets from the dropdown menu

In the pop-up window, choose

- “Select history”: the history you want to import the data to (or create a new one)

- Click on Import

Add a tag to each dataset (one with

#Barcode10and the other#Barcode11)

- Click on the dataset

- Click on galaxy-tags Add Tags

Add a tag starting with

#Tags starting with

#will be automatically propagated to the outputs of tools using this dataset.- Check that the tag is appearing below the dataset name

In this tutorial, we can offer 2 versions:

- A short version, running prebuilt workflows

- A long version, going step-by-step

Hands-on: Choose Your Own TutorialThis is a "Choose Your Own Tutorial" section, where you can select between multiple paths. Click one of the buttons below to select how you want to follow the tutorial

Pre-Processing

Before starting any analysis, it is always a good idea to assess the quality of your input data and to discard poor quality base content by trimming and filtering reads.

In this section we will run a Galaxy workflow that performs the following tasks with the following tools:

- Assess the reads quality before and after preprocessing it using FastQC, NanoPlot and MultiQC (Ewels et al. 2016)

- Trimming and filtering reads by length and quality using Porechop and Fastp (Chen et al. 2018)

- Remove all possible hosts sequences e.g. chicken, cow, etc. using Kraken2 (Wood and Salzberg 2014) with the Kalamari database, and table manipulation tools, which are Filter Tabular (Johnson et al. 2018) and Filter Sequence By ID (Cock et al. 2013) to separate the sequences into host and non-host sequences before moving on, in the next section, with the non-host sequences.

We will run all these steps using a single workflow, then discuss each step and the results in more detail.

Hands-on: Pre-Processing

- Import the workflow into Galaxy

- Copy the URL (e.g. via right-click) of this workflow or download it to your computer.

- Import the workflow into Galaxy

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on galaxy-upload Import at the top-right of the screen

- Provide your workflow

- Option 1: Paste the URL of the workflow into the box labelled “Archived Workflow URL”

- Option 2: Upload the workflow file in the box labelled “Archived Workflow File”

- Click the Import workflow button

Run Workflow 1: Nanopore Datasets - Pre-Processing workflow using the following parameters

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on the workflow-run (Run workflow) button next to your workflow

- Configure the workflow as needed

- Click the Run Workflow button at the top-right of the screen

- You may have to refresh your history to see the queued jobs

param-files “1: Nanopore reads of a sample”: FastQ files

- Click on param-files Multiple datasets

- Select several files by keeping the Ctrl (or COMMAND) key pressed and clicking on the files of interest

“2: Host to Remove Specifier”:

^.*Gallus|Homo|Bos.*$We specify here the taxonomic names of the hosts so we can filter reads assigned to these hosts. To be generic, we remove here:

- Human (

Homo)- Chicken (

Gallus)- Beef (

Bos)If the contaminated food would be known to come from a different source and may, thus, include other host genomes, you could change the value here.

The workflow will take a little while to complete. Once tools have completed, the results will be available in your history for viewing. Note that only the most important outputs will be visible; intermediate files are hidden by default.

While you are waiting for the workflow to complete, please continue reading in the next section(s) where we will go into a bit more detail about what happens at each step of the workflow we launched and examine the results.

Quality Control and preprocessing

During sequencing, errors are introduced, such as incorrect nucleotides being called. These are due to the technical limitations of each sequencing platform. Sequencing errors might bias the analysis and can lead to a misinterpretation of the data. Sequence quality control is therefore an essential first step in your analysis.

In this tutorial we use similar tools as described in the tutorial “Quality control”, but more specific to Nanopore data:

- Quality control with

- FastQC generates a web report that will aid you in assessing the quality of your data

- NanoPlot plotting tool for long read sequencing data and alignments

Hands-on: Initial quality assessment- FastQC Tool: toolshed.g2.bx.psu.edu/repos/devteam/fastqc/fastqc/0.73+galaxy0 with the following parameters:

-

param-files “Raw read data from your current history”: both FastQ file

- Click on param-files Multiple datasets

- Select several files by keeping the Ctrl (or COMMAND) key pressed and clicking on the files of interest

-

- NanoPlot Tool: toolshed.g2.bx.psu.edu/repos/iuc/nanoplot/nanoplot/1.28.2+galaxy1 with the following parameters:

- “Select multifile mode”:

batch- “Type of the file(s) to work on”:

fastq- param-files “Data input files”: both FastQ file

- “Type of the file(s) to work on”:

CommentThis step, as it does not require the results of FastQC to run, can be launched even if FastQC is not ready

- “Select multifile mode”:

-

Read trimming and filtering with Porechop and Fastp (Chen et al. 2018)

Hands-on: Read trimming and filtering- Porechop Tool: toolshed.g2.bx.psu.edu/repos/iuc/porechop/porechop/0.2.4 with the following parameters:

- param-files “Input FASTA/FASTQ”: both FastQ file

- “Output format for the reads”:

fastq.gz

- fastp Tool: toolshed.g2.bx.psu.edu/repos/iuc/fastp/fastp/0.20.1+galaxy0 with the following parameters:

- “Single-end or paired reads”:

Single-end- param-files “Input 1”: outputs of Porechop tool

- In Output Options

- “Output JSON report”:

Yes

- “Output JSON report”:

CommentThis step can be launched even if Porechop is not done. It will be scheduled and wait until Porechop is done to start.

- “Single-end or paired reads”:

- Porechop Tool: toolshed.g2.bx.psu.edu/repos/iuc/porechop/porechop/0.2.4 with the following parameters:

-

Quality recheck after read trimming and filtering with FastQC and Nanoplot and report aggregation with MultiQC

Hands-on: Final quality checks- FastQC Tool: toolshed.g2.bx.psu.edu/repos/devteam/fastqc/fastqc/0.73+galaxy0 with the following parameters:

- param-files “Raw read data from your current history”: outputs of fastp tool

- NanoPlot Tool: toolshed.g2.bx.psu.edu/repos/iuc/nanoplot/nanoplot/1.28.2+galaxy1 with the following parameters:

- “Select multifile mode”:

batch- “Type of the file(s) to work on”:

fastq- param-files “files”: outputs of fastp tool

- “Type of the file(s) to work on”:

- “Select multifile mode”:

- MultiQC Tool: toolshed.g2.bx.psu.edu/repos/iuc/multiqc/multiqc/1.11+galaxy0 with the following parameters:

- In “Results”:

- param-repeat “Insert Results”

- “Which tool was used generate logs?”:

FastQC- In “FastQC output”:

- param-repeat “Insert FastQC output”

- “Type of FastQC output?”:

Raw data - param-files “FastQC output”: 4

Raw dataoutputs of FastQC tool

- “Type of FastQC output?”:

- param-repeat “Insert FastQC output”

- In “FastQC output”:

- “Which tool was used generate logs?”:

- param-repeat “Insert Results”

- “Which tool was used generate logs?”:

fastp- param-files “Output of fastp”:

JSON reportoutputs of fastp tool

- param-files “Output of fastp”:

- “Which tool was used generate logs?”:

- param-repeat “Insert Results”

- In “Results”:

- FastQC Tool: toolshed.g2.bx.psu.edu/repos/devteam/fastqc/fastqc/0.73+galaxy0 with the following parameters:

QuestionInspect the HTML output of MultiQC for

Barcode10

- How many sequences does

Barcode10contain before and after trimming?- What is the quality score over the reads before and after trimming? And the mean score?

- What is the importance of FastQC?

- Before trimming the file has 114,986 sequences and After trimming the file has 91,434 sequences

The “Per base sequence quality” is globally medium: the quality score stays above 20 over the entire length of reads after trimming, while quality below 20 could be seen before trimming specially at the beginning and the end of the reads.

- After checking what is wrong, e.g. before trimming, we should think about the errors reported by FastQC: they may come from the type of sequencing or what we sequenced (check the “Quality control” training: FastQC for more details). However, despite these challenges, we can already see sequences getting slightly better after the trimming and filtering, so now we can proceed with our analyses.

CommentFor more information about how to interpret the plots generated by FastQC and MultiQC, please see our dedicated “Quality control” Tutorial.

Host read filtering

Generally, we are not interested in the food (host) sequences, rather only those originating from the pathogen itself. It is an important to get rid of all host sequences and to only retain sequences that might include a pathogen, both in order to speed up further steps and to avoid host sequences compromising the analysis.

In this tutorial, we know the samples come from chicken meat spiked with Salmonella so we already know what will we get as the host and the main pathogen.

In this tutorial we use:

-

Assign reads to taxa using Kraken2 (Wood and Salzberg 2014) and Kalamari, a database of completed assemblies for metagenomics-related tasks used widely in contamination and host filtering

Hands-on: Read taxonomic classification for host filtering- Kraken2 Tool: toolshed.g2.bx.psu.edu/repos/iuc/kraken2/kraken2/2.1.1+galaxy1 with the following parameters:

- “Single or paired reads”:

Single- param-files “Input sequences”: outputs of fastp tool

- “Print scientific names instead of just taxids”:

Yes - In “Create Report”:

- “Print a report with aggregrate counts/clade to file”:

Yes - “Format report output like Kraken 1’s kraken-mpa-report”:

Yes - “Report counts for ALL taxa, even if counts are zero”:

Yes - “Report minimizer data”:

Yes

- “Print a report with aggregrate counts/clade to file”:

- “Select a Kraken2 database”:

kalamari

- “Single or paired reads”:

QuestionInspect the report of Kraken2 for

Barcode10- What is the species of the host?

- How many sequences of this host was found?

- Gallus gallus (taxid 9031), which is chicken

- 836

- Kraken2 Tool: toolshed.g2.bx.psu.edu/repos/iuc/kraken2/kraken2/2.1.1+galaxy1 with the following parameters:

-

Filter host assigned reads based on Kraken2 assignments

- Manipulate Kraken2 classification to extract the sequence ids of all hosts sequences identified with Kraken2

- Filter the FASTQ files to get 1 ouput with the host-assigned sequences and 1 output without the host-assigned reads

Hands-on: Host read filtering- Filter Tabular Tool: toolshed.g2.bx.psu.edu/repos/iuc/filter_tabular/filter_tabular/2.0.0 with the following parameters:

- param-files “Tabular Dataset to filter”:

Classificationoutputs of Kraken2 tool - In “Filter Tabular Input Lines”:

- param-repeat “Insert Filter Tabular Input Lines”

- “Filter By”:

by regex expression matching-

“regex pattern”:

^.*Gallus|Homo|Bos.*$We specify here the taxonomic names of the hosts so we can filter reads assigned to these hosts. To be generic, we remove here:

- Human (

Homo) - Chicken (

Gallus) - Beef (

Bos)

If the contaminated food comes from and may include other animals, you can change the value here.

- Human (

-

“action for regex match”:

include line if pattern found

-

- “Filter By”:

- param-repeat “Insert Filter Tabular Input Lines”

- param-files “Tabular Dataset to filter”:

- Filter sequences by ID Tool: toolshed.g2.bx.psu.edu/repos/peterjc/seq_filter_by_id/seq_filter_by_id/0.2.7 with the following parameters:

- param-files “Sequence file to be filtered”: outputs of fastp tool

- “Filter using the ID list from”:

tabular file- param-files “Tabular file containing sequence identifiers”: outputs of Filter Tabular tool

- “Column(s) containing sequence identifiers”:

Column: 2

- “Output positive matches, negative matches, or both?”:

Both positive matches (ID on list) and negative matches (ID not on list), as two files

CommentWe will need the outputs from this section in the next one. If yours is still running or you get an error you can go on and upload it so you can start the next workflow, the next hands-on is optional.

Hands-on: Optional Data upload

Import the quality processed samples fastqsanger files via link from Zenodo or the Shared Data library:

https://zenodo.org/record/7593928/files/Nanopore_processed_sequenced_reads_Barcode10_Spike2.fastqsanger https://zenodo.org/record/7593928/files/Nanopore_processed_sequenced_reads_Barcode11_Spike2b.fastqsangerAdd tags to the datasets

Taxonomy Profiling

In this section we would like to identify the different organisms found in our samples by assigning taxonomy levels to the reads starting from the kingdom level down to the species level and visualize the result. It’s important to check what might be the species of a possible pathogen to be found, it gets us closer to the investigation as well as discovering possible multiple food infections if any existed.

Taxonomy is the method used to naming, defining (circumscribing) and classifying groups of biological organisms based on shared characteristics such as morphological characteristics, phylogenetic characteristics, DNA data, etc. It is founded on the concept that the similarities descend from a common evolutionary ancestor.

Defined groups of organisms are known as taxa. Taxa are given a taxonomic rank and are aggregated into super groups of higher rank to create a taxonomic hierarchy. The taxonomic hierarchy includes eight levels: Domain, Kingdom, Phylum, Class, Order, Family, Genus and Species.

The classification system begins with 3 domains that encompass all living and extinct forms of life

- The Bacteria and Archae are mostly microscopic, but quite widespread.

- Domain Eukarya contains more complex organisms

When new species are found, they are assigned into taxa in the taxonomic hierarchy. For example for the cat:

Level Classification Domain Eukaryota Kingdom Animalia Phylum Chordata Class Mammalia Order Carnivora Family Felidae Genus Felis Species F. catus From this classification, one can generate a tree of life, also known as a phylogenetic tree. It is a rooted tree that describes the relationship of all life on earth. At the root sits the “last universal common ancestor” and the three main branches (in taxonomy also called domains) are bacteria, archaea and eukaryotes. Most important for this is the idea that all life on earth is derived from a common ancestor and therefore when comparing two species, you will -sooner or later- find a common ancestor for all of them.

Let’s explore taxonomy in the Tree of Life, using Lifemap

In the previous section we ran Kraken2 along with the Kalamari database, which is also a kind of taxonomy profiling but the database used is designed to include all possible host sequences. In the following part, we run Kraken2 again; but this time with one of its built-in databases, Standard PlusPF, which can give us more insight into pathogen candidate species than Kalamari. You can test this yourself by comparing reports of both Kraken2 runs.

In the \(k\)-mer approach for taxonomy classification, we use a database containing DNA sequences of genomes whose taxonomy we already know. On a computer, the genome sequences are broken into short pieces of length \(k\) (called \(k\)-mers), usually 30bp.

Kraken examines the \(k\)-mers within the query sequence, searches for them in the database, looks for where these are placed within the taxonomy tree inside the database, makes the classification with the most probable position, then maps \(k\)-mers to the lowest common ancestor (LCA) of all genomes known to contain the given \(k\)-mer.

Kraken2 uses a compact hash table, a probabilistic data structure that allows for faster queries and lower memory requirements. It applies a spaced seed mask of s spaces to the minimizer and calculates a compact hash code, which is then used as a search query in its compact hash table; the lowest common ancestor (LCA) taxon associated with the compact hash code is then assigned to the k-mer.

You can find more information about the Kraken2 algorithm in the paper Improved metagenomic analysis with Kraken 2.

Hands-on: Taxonomy Profiling and visualisation

- Import the workflow into Galaxy

- Copy the URL (e.g. via right-click) of this workflow or download it to your computer.

- Import the workflow into Galaxy

- Run Workflow 2: Nanopore Datasets - Taxonomy Profiling and Visualization workflow using the following parameters:

- “Send results to a new history”:

No- param-files “Nanopore preprocessed reads”:

Nanopore processed sequenced readsfiles for both tags- “Sample Metadata”: Leave empty

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on the workflow-run (Run workflow) button next to your workflow

- Configure the workflow as needed

- Click the Run Workflow button at the top-right of the screen

- You may have to refresh your history to see the queued jobs

To assign reads to taxons, we use Kraken2 with Standard PlusPF database.

Hands-on: Taxonomy Profiling

- Kraken2 Tool: toolshed.g2.bx.psu.edu/repos/iuc/kraken2/kraken2/2.1.1+galaxy1 with the following parameters:

- “Single or paired reads”:

Single

- param-files “Input sequences”: Outputs without matched ID of Filter sequences by ID tool

- In “Create Report”:

- “Print a report with aggregrate counts/clade to file”:

Yes- “Select a Kraken2 database”:

Prebuilt Refseq indexes: PlusPF (Standard plus protozoa and fungi) (Version: 2022-06-07 - Downloaded: 2022-09-04T165121Z)

QuestionInspect the Kraken2 report for

Barcode10tag

- What is the most commonly found species?

- What is the second most commonly found species?

- How many sequences are classified and how many are unclassified?

- What are the differences between Kraken2 tool’s report with Kalamari database and Kraken2 tool’s report with Standard PlusPF database regarding the previous 3 questions?

- Escherichia coli with 10,243 sequences

- Salmonella enterica with 7,458 sequences

- 40,143 sequences are classified and 50,455 are unclassified

- With Kalamari database the most found species is Escherichia coli with 12,577 sequences and the second most found species is Salmonella enterica with 10,632 sequences. The number of classified sequences are 32,020 sequences and the unclassified sequences are 59,414. In conclusion, both databases are able to show the same results of the most common species. However, the number of the classified sequences with Standard PlusPF database is higher than Kalamari database and it would be even higher since all chicken sequences were removed before testing the Standard PlusPF database.

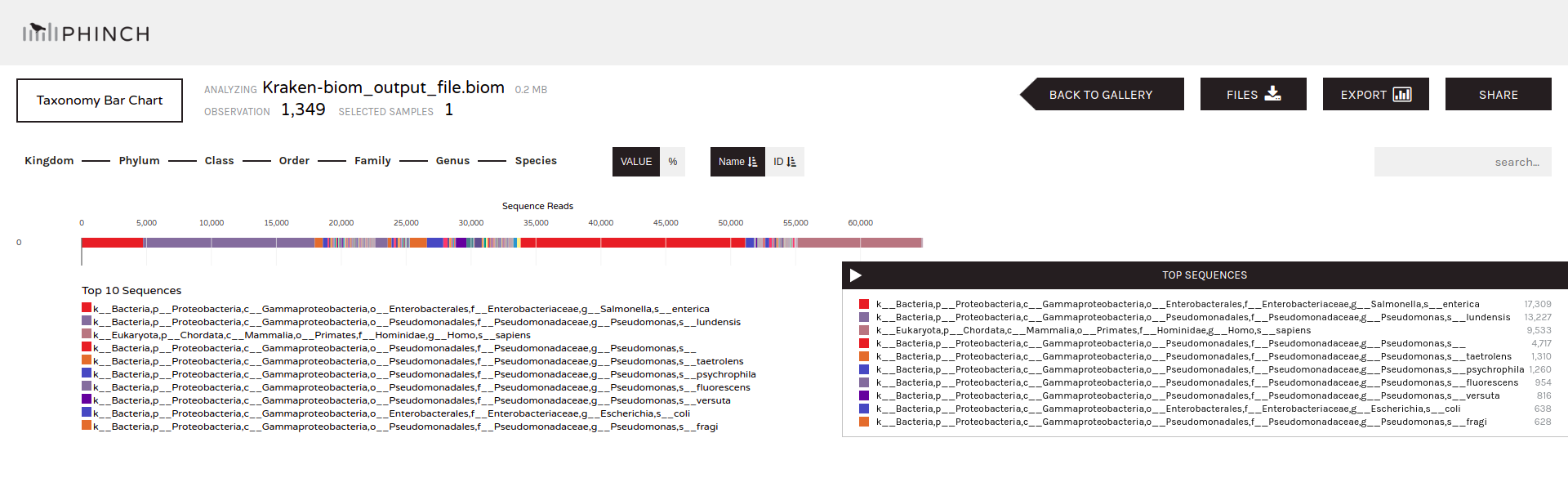

In order to view the taxonomy profiling produced by Kraken2 tool, there are a lot of tools to be used afterwards such as Krona pie chart, however too many species were detected to be shown by this tool. For that reason, we have chosen the Phinch visualization interactive tool as it contains multiple visualization plots, it is interactive alowing you to choose between different parameters, you can visualize each taxonomic level on its own, you can have the metadata of the samples represented along with the taxonomic visualization, download all plots for publications and a lot of other benefits. For later, you can check out Pavian tool as well it can replace Phinch visualization with similar outputs.

Phinch visualization needs a BIOM file format as an input, and for that we need the Kraken-Biom tool to convert the Kraken2 tabular output into a Biom file.

Hands-on: BIOM generation

- Kraken-biom Tool: toolshed.g2.bx.psu.edu/repos/iuc/kraken_biom/kraken_biom/1.2.0+galaxy1 with the following parameters:

- param-files “Input files to Kraken-biom: Kraken report output file(s)”: Report outputs of Kraken2 tool

- param-file “Sample metadata file”: Leave empty

- “Do you want to create an OTU IDs file”:

Yes- “Output Format”:

JSON

Once the BIOM file has been generated, we launch the interactive visualisation tool called Phinch visualization:

Hands-on: Visualisation with Phinch

- Phinch Visualisation Tool: interactive_tool_phinch with the following parameters:

- param-file “Biom1 dataset”: output of Kraken-biom tool

Now let’s now explore the Phinch visulization tool running for Barcode11 tag

Hands-on: Explore data interactively

Open Phinch interactive tool

- Go to User > Active InteractiveTools

- Wait for the Phinch visualization to be running (Job Info)

- Click on Phinch visualization

Click on Proceed to Gallery button on the top right of the opened webpage to see all the plots

Question

- What is the most commonly found species?

- What is the second most commonly found species?

- What’s your favorite visualization plot?

- Salmonella enterica with 17,309 sequences

- Pseudomonas lundensis with 13,227 sequences

All of them are good visualization of the data but for us to answer these questions, we used the Taxonomy Bar Chart

CommentWhile these steps are running, you can move on to the next section Gene based pathogenic identification and run the steps there, as well. Both analyses can execute in parallel.

Gene based pathogenic identification

With taxonomy profiling, we identified some bacterial species. But we want to be sure they are pathogenic, by looking for genes known to be linked to pathogenicity or to the pathogenecity character of the organim:

- Virulence Factor (VF): gene products, usually proteins, involved in pathogenicity. By identifiying them we can call a pathogen and its severity level

-

Antimicrobial Resistance genes (AMR).

These type of genes have three fundamental mechanisms of antimicrobial resistance that are enzymatic degradation of antibacterial drugs, alteration of bacterial proteins that are antimicrobial targets, and changes in membrane permeability to antibiotics, which will lead to not altering the target site and spread throughput the pathogenic bacteria decreasing the overall fitness of the host.

To look for these genes and determine the strain of the bacteria we are testing for pathogenicity we use Multilocus Sequence Typing approach and dedicated pubMLST datases database:

- Genome assembly to get contigs, i.e. longer sequences, using metaflye (Kolmogorov et al. 2020) then assembly polishing using medaka consensus pipeline and visualizing the assembly graph using Bandage Image (Wick et al. 2015)

- Generate an MLST report with MLST tool that scans genomes against PubMLST schemes

- Generate reports with AMR genes and VF using ABRicate

As outputs, we will get our FASTA and Tabular files to track genes and visualize our pathogenic identification. For that we will need one more file to create a report and we can upload it directly:

Hands-on: Data upload

Import a tabular file via link from Zenodo or shared data libraries

https://zenodo.org/record/7593928/files/MLST_Report_Header.tabularCheck that the datatype is

Tabular

Hands-on: Gene based Pathogenic Identification

- Import the workflow into Galaxy

- Copy the URL (e.g. via right-click) of this workflow or download it to your computer.

- Import the workflow into Galaxy

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on galaxy-upload Import at the top-right of the screen

- Provide your workflow

- Option 1: Paste the URL of the workflow into the box labelled “Archived Workflow URL”

- Option 2: Upload the workflow file in the box labelled “Archived Workflow File”

- Click the Import workflow button

- Run Workflow 3: Nanopore Datasets - Gene based Pathogenic Identification workflow using the following parameters:

- param-file “Nanopore Preprocessed reads”:

Nanopore processed sequenced readsforBarcode10- “Sample ID”:

Barcode10_Spike2- param-file “MLST Report Header”:

MLST Report with Header

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on the workflow-run (Run workflow) button next to your workflow

- Configure the workflow as needed

- Click the Run Workflow button at the top-right of the screen

- You may have to refresh your history to see the queued jobs

- Repeat step 2 and run the same workflow again for the second tag

- param-file “Nanopore Preprocessed reads”:

Nanopore processed sequenced readsforBarcode11- “Sample ID”:

Barcode11_Spike2b- param-file “MLST Report Header”:

MLST Report with Header

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on the workflow-run (Run workflow) button next to your workflow

- Configure the workflow as needed

- Click the Run Workflow button at the top-right of the screen

- You may have to refresh your history to see the queued jobs

Assembly

To identify VF or AMR genes, it is better to assemble reads into longer seuqences or contigs, that can be then used to search databases for the presence of any pathogenic gene:

-

Assembly of long-read metagenomic data using metaflye or Flye.

Hands-on: Assembly with Flye- Flye Tool: toolshed.g2.bx.psu.edu/repos/bgruening/flye/flye/2.9+galaxy0 with the following parameters:

-

param-file “Input reads”: Outputs without matched ID of Filter sequences by ID tool for

Barcode10CommentWe need to run Flye individually on each sample otherwise Flye runs by default a co-assembly mode, i.e. it combines read of both samples together before running the assembly.

- “Mode”:

Nanopore HQ (--nano-hq) - “Perform metagenomic assembly”:

Yes - “Reduced contig assembly coverage”:

Disable reduced coverage for initial disjointing assembly

-

- Flye Tool: toolshed.g2.bx.psu.edu/repos/bgruening/flye/flye/2.9+galaxy0 with the following parameters:

- param-file “Input reads”: Outputs without matched ID of Filter sequences by ID tool for

Barcode11 - “Mode”:

Nanopore HQ (--nano-hq) - “Perform metagenomic assembly”:

Yes - “Reduced contig assembly coverage”:

Disable reduced coverage for initial disjointing assembly

- param-file “Input reads”: Outputs without matched ID of Filter sequences by ID tool for

- Flye Tool: toolshed.g2.bx.psu.edu/repos/bgruening/flye/flye/2.9+galaxy0 with the following parameters:

-

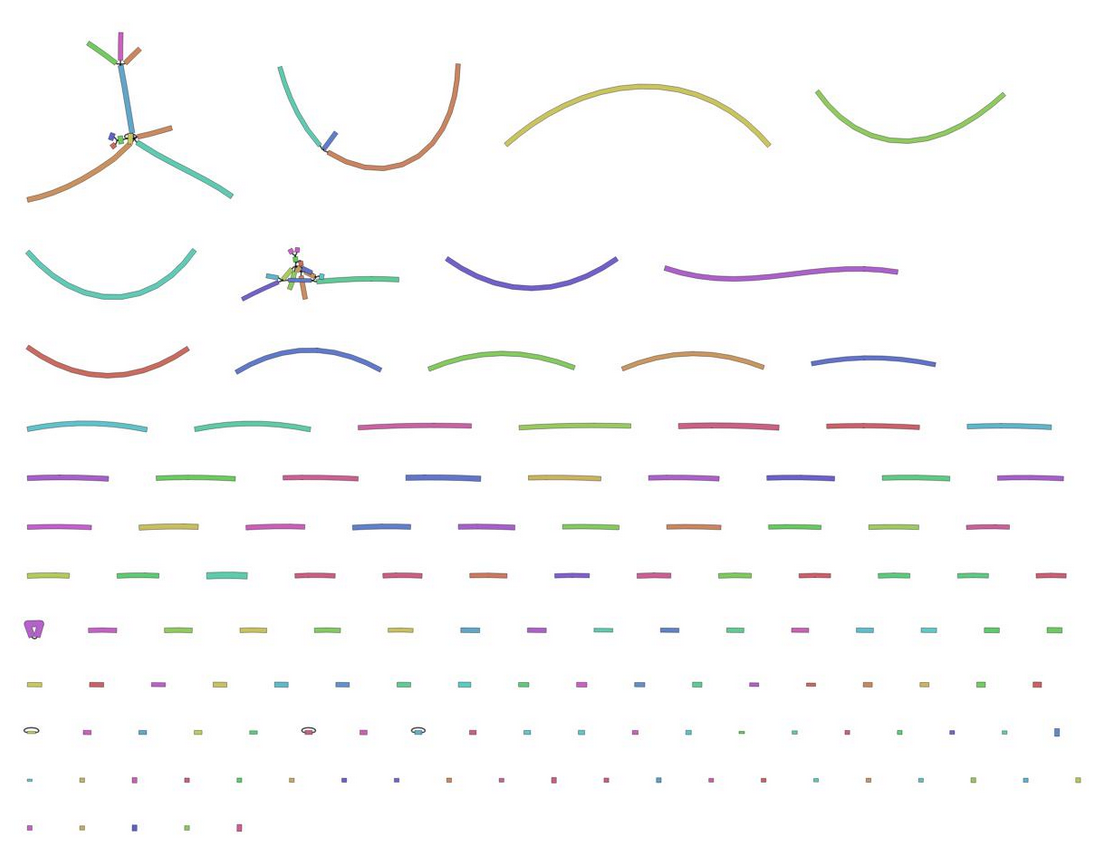

For the visualization of the assembly graph output from Flye we have chosen Bandage Image.

Hands-on: Visualization of the assembly grap- Bandage Image Tool: toolshed.g2.bx.psu.edu/repos/iuc/bandage/bandage_image/0.8.1+galaxy2 with the following parameters:

- param-files “Graphical Fragment Assembly”: Assembly graph outputs of Flye tool

- Bandage Image Tool: toolshed.g2.bx.psu.edu/repos/iuc/bandage/bandage_image/0.8.1+galaxy2 with the following parameters:

-

Contig polishing using medaka to correct the long, error-prone Nanopore reads

Hands-on: Contig polishing- medaka consensus pipeline Tool: toolshed.g2.bx.psu.edu/repos/iuc/medaka_consensus_pipeline/medaka_consensus_pipeline/1.7.2+galaxy0 with the following parameters:

- param-files “Select basecalls”: Outputs without matched ID of Filter sequences by ID tool

- param-files “Select assembly”: Output of Flye tool

- “Select model”:

r941_min_hac_g507 - “Select output file(s)”:

select all

To keep information about the provenance of the contigs, we can rename them to add contig information to them.

Hands-on: Contig renaming to add sample names- FASTA-to-Tabular Tool: toolshed.g2.bx.psu.edu/repos/devteam/fasta_to_tabular/fasta2tab/1.1.1 with the following parameters:

- param-files “Convert these sequences”: output of medaka consensus pipeline tool

- Replace Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_find_and_replace/1.1.4 with the following parameters:

- param-file “File to process”: output of FASTA-to-Tabular tool for

Barcode10 - In “Find and Replace”:

- param-repeat “Insert Find and Replace”

- “Find pattern”:

^(.+)$ - “Replace with”:

Barcode10_$1 - “Find-Pattern is a regular expression”:

Yes - “Replace all occurences of the pattern”:

Yes - “Find and Replace text in”:

specific column- “in column”:

Column: 1

- “in column”:

- “Find pattern”:

- param-repeat “Insert Find and Replace”

- param-file “File to process”: output of FASTA-to-Tabular tool for

-

Rename the output to

Contigs Table - Barcode10- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

- Replace Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_find_and_replace/1.1.4 with the following parameters:

- param-file “File to process”: output of FASTA-to-Tabular tool for

Barcode11 - In “Find and Replace”:

- param-repeat “Insert Find and Replace”

- “Find pattern”:

^(.+)$ - “Replace with”:

Barcode11_$1 - “Find-Pattern is a regular expression”:

Yes - “Replace all occurences of the pattern”:

Yes - “Find and Replace text in”:

specific column- “in column”:

Column: 1

- “in column”:

- “Find pattern”:

- param-repeat “Insert Find and Replace”

- param-file “File to process”: output of FASTA-to-Tabular tool for

-

Rename the output to

Contigs Table - Barcode11- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

- Tabular-to-FASTA Tool: toolshed.g2.bx.psu.edu/repos/devteam/tabular_to_fasta/tab2fasta/1.1.1 with the following parameters:

- param-files “Tab-delimited file”: outputs of Replace tool

- “Title column(s)”:

Column: 1 - “Sequence column”:

Column: 2

- Rename the outputs to

Contigs - Barcode10andContigs - Barcode11

- medaka consensus pipeline Tool: toolshed.g2.bx.psu.edu/repos/iuc/medaka_consensus_pipeline/medaka_consensus_pipeline/1.7.2+galaxy0 with the following parameters:

QuestionInspect Flye and Medaka consensus pipeline output results for

Barcode10

- How many different contigs did you get after Flye?

- How many were left after Medaka consensus pipeline, and what does that mean?

- What is the result of your Bandage Image?

- After Flye we have got 137 contigs

- After Medaka consensus pipeline all 137 contigs were kept, which means that the quality of the Flye run was high, and as a result the polishing did not remove any of the contigs.

The graph looks like:

Multilocus Sequence Typing

MLST tool is used to scan the pubMLST database against PubMLST typing schemes. It’s one of the analyses that you can perform on your dataset to determine the allele IDs, you can also detect novel alleles. This step is not essential to identify pathogens and track them in the remainder of this tutorial, however we wanted to show some of the analysis that one can use Galaxy in to understand more about the dataset, as well as identifying the strain that might be a pathogen or not.

Hands-on: Multilocus Sequence Typing

- MLST Tool: toolshed.g2.bx.psu.edu/repos/iuc/mlst/mlst/2.22.0 with the following parameters:

- param-files “input_files”: Sample Specific

Contigs-BarcodeXXFASTA files with contigs- “Specify advanced parameters”:

Yes, see full parameter list

- “Output novel alleles”:

Yes- “Automatically set MLST scheme”:

Automatic MLST scheme detection

The output file of the MLST tool is a tab-separated output file which contains:

- the filename

- the closest PubMLST scheme name

- the ST (sequence type)

- the allele IDs

QuestionInspect MLST tool results

- What is the the closest PubMLST typing scheme name detected by the tool for

Barcode11?

- senterica_achtman_2 (“Multilocus Sequence Typing as a Replacement for Serotyping in Salmonella enterica” 2012)

Antimicrobial Resistance Genes

Now, to search AMR genes among our samples’ contigs, we run ABRicate and choose the NCBI Bacterial Antimicrobial Resistance Gene Database (AMRFinderPlus) from the advanced options of the tool. The tool checks if there is an AMR found or not, if found then in which contig it is, its location on the contig, what the name of the exact product is, what substance it provides resistance against and a lot of other information regarding the found AMR.

Hands-on: Antimicrobial Resistance Genes Identification

- ABRicate Tool: toolshed.g2.bx.psu.edu/repos/iuc/abricate/abricate/1.0.1 with the following parameters:

- param-files “Input file (Fasta, Genbank or EMBL file)”: Sample Specific

Contigs-BarcodeXXFASTA files with contigs- In “Advanced options”:

- “Database to use - default is ‘resfinder’“:

NCBI Bacterial Antimicrobial Resistance Reference Gene Database- Rename the generated files

AMR - Barcode10andAMR - Barcode11

The outputs of ABRicate is a tabular file with different columns:

FILE: The filename this hit came fromSEQUENCE: The sequence in the filenameSTART: Start coordinate in the sequenceEND: End coordinateSTRAND: AMR geneGENE: AMR geneCOVERAGE: What proportion of the gene is in our sequenceCOVERAGE_MA: A visual represenationGAPS: Was there any gaps in the alignment - possible pseudogene?%COVERAGE: Proportion of gene covered%IDENTITY: Proportion of exact nucleotide matchesDATABASE: The database this sequence comes fromACCESSION: The genomic source of the sequencePRODUCTRESISTANCE

QuestionInspect ABRicate output files from

Barcode10andBarcode11tags

- How many AMR genes found in

Barcode10sample, what are they? Give more details about them.- How many AMR genes found in

Barcode11sample, what are they? Give more details about them.

- 5 AMR genes were found:

- Tet(C), which resists TETRACYCLINE. It was found in contig 158 from the position 1633 till 2808, with 100% coverage, so 100% of gene is covered in this contig.

- 2 genes with sulfonamide-resistant dihydropteroate synthase Sul1 products

- 2 genes with oxacillin-hydrolyzing class D beta-lactamase OXA-2 products

- No AMR genes were found by the database in

Barcode11_Spike2bsample.

Virulence Factor identification

In this step we return back to the main goal of the tutorial where we want to identify the pathogens: identify if the bacteria found in our samples are pathogenic bacteria or not. One of the ways to do that is to identify if the sequences include genes with a Virulence Facor or not, such that if the samples include gene(s) with a Virulence Factor then it for sure is a pathogen.

Comment: DefinitionsBacterial Pathogen: A bacterial pathogen is usually defined as any bacterium that has the capacity to cause disease. Its ability to cause disease is called pathogenicity.

Virulence: Virulence provides a quantitative measure of the pathogenicity or the likelihood of causing a disease.

Virulence Factors: Virulence factors refer to the properties (i.e., gene products) that enable a microorganism to establish itself on or within a host of a particular species and enhance its potential to cause disease. Virulence factors include bacterial toxins, cell surface proteins that mediate bacterial attachment, cell surface carbohydrates and proteins that protect a bacterium, and hydrolytic enzymes that may contribute to the pathogenicity of the bacterium.

To identifly VFs, we use again ABRicate but this time with the VFDB from the advanced options of the tool.

Hands-on: Virulence Factor identification

- ABRicate Tool: toolshed.g2.bx.psu.edu/repos/iuc/abricate/abricate/1.0.1 with the following parameters:

- param-files “Input file (Fasta, Genbank or EMBL file)”: Sample Specific

Contigs-BarcodeXXFASTA files with contigs- In “Advanced options”:

- “Database to use - default is ‘resfinder’“:

VFDB- Rename the generated files

VFs - Barcode10andVFs - Barcode11

QuestionInspect VFs of genes Identified by VFDB output file from

Barcode10andBarcode11tags

- How many different VFs gene products were found in

Barcode10_Spike2sample?- How many different VFs gene products were found in

Barcode11_Spike2bsample?

- 123

- 97

Hands-on: Formatting

- Cut Tool: Cut1 with the following parameters:

- “Cut columns”:

c13- param-files “From”: outputs of ABRicate tool

Rename the outputs

VFs accessions - Barcode10andVFs accessions - Barcode11- Add line to file Tool: toolshed.g2.bx.psu.edu/repos/bgruening/add_line_to_file/add_line_to_file/0.1.0 with the following parameters:

- “text to add”:

Barcode10- param-file “input file”:

VFs accessions - Barcode10Rename the output

VFs accessions with SampleID as a header- Barcode10- Add line to file Tool: toolshed.g2.bx.psu.edu/repos/bgruening/add_line_to_file/add_line_to_file/0.1.0 with the following parameters:

- “text to add”:

Barcode11- param-file “input file”:

VFs accessions - Barcode11- Rename the output

VFs accessions with SampleID as a header- Barcode11

SNP based pathogenic identification

Now we would like to identify pathogens with a third approach based on variant and single nucleotide polymorphisms (SNPs) calling: comparison of reads to a targeted reference genome, and call the differences between sample reads and reference genomes to identify variants.

For example, if we want to check whether our samples include Campylobacter pathogenic strains or not, we will map our samples against the reference genome of the Campylobacter species. Variants in specific positions on the genome are queried to judge if these variations would indicate a pathogen or not.

This approach also allows identification of novel alleles and possible new variants of the pathogen.

Using this approach, we also build the consensus genome of each sample, so we can later build a phylogenetic tree of all samples’ full genomes and have an insight into events that occurred during the evolution of same or different species in the samples.

In this training, we are testing Salmonella enterica, with different strains of which our samples were spiked. So we will now upload to our history the reference genome of S. enterica we originally obtained from the National Center for Biotechnology Information (NCBI) database.

Hands-on: Data upload

Import a reference genome FASTA file via link from Zenodo or Galaxy shared data libraries

https://zenodo.org/record/7593928/files/Salmonella_Ref_genome.fna.gz

Hands-on: SNP based Pathogenic Identification

- Import the workflow into Galaxy

- Copy the URL (e.g. via right-click) of this workflow or download it to your computer.

- Import the workflow into Galaxy

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on galaxy-upload Import at the top-right of the screen

- Provide your workflow

- Option 1: Paste the URL of the workflow into the box labelled “Archived Workflow URL”

- Option 2: Upload the workflow file in the box labelled “Archived Workflow File”

- Click the Import workflow button

- Run Workflow 4: Nanopore Datasets - SNP based Pathogenic Identification workflow using the following parameters:

- “Send results to a new history”:

No- param-files “Nanopore Preprocessed reads”:

Nanopore processed sequenced reads- param-file “Reference Genome of Tested Strain/Pathogen”:

Salmonella_Ref_genome.fna.gz

Variant Calling or SNP Calling

To identify variants, we

-

Map reads to the reference genome of the species of the pathogen we want to test our samples against using Minimap2 (Li 2017) as our datasets are from a Nanopore:

Hands-on: Mapping to reference genome- Map with minimap2 Tool: toolshed.g2.bx.psu.edu/repos/iuc/minimap2/minimap2/2.24+galaxy0 with the following parameters:

- “Will you select a reference genome from your history or use a built-in index?”:

Use a genome from history and build index- param-file “Use the following dataset as the reference sequence”:

Salmonella_Ref_genome.fna.gz

- param-file “Use the following dataset as the reference sequence”:

- “Single or Paired-end reads”:

Single- param-files “Select fastq dataset”: Outputs without matched ID of Filter sequences by ID tool for

Barcode10andBarcode11

- param-files “Select fastq dataset”: Outputs without matched ID of Filter sequences by ID tool for

- “Will you select a reference genome from your history or use a built-in index?”:

- Map with minimap2 Tool: toolshed.g2.bx.psu.edu/repos/iuc/minimap2/minimap2/2.24+galaxy0 with the following parameters:

-

Identify variants and single nucleotide variants using Clair3 (Zheng et al. 2021), which is designed specifically for Nanopore datasets and giving better results than other variant calling tools, as well as being new and up-to-date.

CommentMedaka consensus tool and medaka variant tool can be also used instead of Clair3, they give similar results but they are much slower then Clair3 and offer fewer options.

Hands-on: Variant or SNP Calling- Clair3 Tool: toolshed.g2.bx.psu.edu/repos/iuc/clair3/clair3/0.1.12+galaxy0 with the following parameters:

- param-files “BAM/CRAM file input”: Outputs of Map with minimap2 tool

- “Reference genome source”:

History- param-file “Reference genome”:

Salmonella_Ref_genome.fna.gz

- param-file “Reference genome”:

- “Clair3 model”:

Built-in- “Built-in model”:

r941_prom_hac_g360+g422

- “Built-in model”:

- In “Advanced parameters”:

- “Call with the following ploidy model”:

haploid precise (--haploid_precise)

- “Call with the following ploidy model”:

- Clair3 Tool: toolshed.g2.bx.psu.edu/repos/iuc/clair3/clair3/0.1.12+galaxy0 with the following parameters:

-

Left-align and normalize indels using bcftools norm (Li et al. 2009)

This step:

- checks REF alleles in the output match the reference;

- splits multiallelic sites into multiple rows;

- recovers multiallelics from multiple rows

Hands-on: Left-align and normalize indels- bcftools norm Tool: toolshed.g2.bx.psu.edu/repos/iuc/bcftools_norm/bcftools_norm/1.9+galaxy1 with the following parameters:

- param-files “VCF/BCF Data”: Outputs of Clair3 tool

- “Choose the source for the reference genome”:

Use a genome from the history- param-file “Reference genome”:

Salmonella_Ref_genome.fna.gz

- param-file “Reference genome”:

- “output_type”:

uncompressed VCF

-

Filter variants to keep only the pass and good quality variants using SnpSift Filter (Cingolani et al. 2012)

CommentLoFreq filter can be also used instead, both tools performs equal and fast results.

Hands-on: Filter variants- SnpSift Filter Tool: toolshed.g2.bx.psu.edu/repos/iuc/snpsift/snpSift_filter/4.3+t.galaxy1 with the following parameters:

- param-files “Input variant list in VCF format”: Outputs of bcftools norm tool

- “Type of filter expression”:

Simple expression- “Filter criteria”:

(QUAL > 2)

- “Filter criteria”:

- “Filter mode”:

Retain selected variants, remove others

The output is a VCF file. VCF is the standard file format for storing variation data, with different columns:

#CHROM: ChromosomePOS: Co-ordinate - The start coordinate of the variant.ID: IdentifierREF: Reference allele - The reference allele is whatever is found in the reference genome. It is not necessarily the major allele.ALT: Alternative allele - The alternative allele is the allele found in the sample you are studying.QUAL: Score - Quality score out of 100.FILTER: Pass/fail - If it passed quality filters.INFO: Further information - Allows you to provide further information on the variants. Keys in the INFO field can be defined in header lines above thetable.FORMAT: Information about the following columns - The GT in the FORMAT column tells us to expect genotypes in the following columns.Individual identifier (optional)- The previous column told us to expect to see genotypes here. The genotype is in the form “0|1”, where 0 indicates the reference allele and 1 indicates the alternative allele, i.e it is heterozygous. The vertical pipe “|” indicates that the genotype is phased, and is used to indicate which chromosome the alleles are on. If this is a slash (/) rather than a vertical pipe, it means we don’t know which chromosome they are on.

- SnpSift Filter Tool: toolshed.g2.bx.psu.edu/repos/iuc/snpsift/snpSift_filter/4.3+t.galaxy1 with the following parameters:

-

Extract a tabular report with Chromosome, Position, Identifier, Reference allele, Alternative allele and Filter from the VCF files using SnpSift Extract Fields

Hands-on: Extract a tabular report- SnpSift Extract Fields Tool: toolshed.g2.bx.psu.edu/repos/iuc/snpsift/snpSift_extractFields/4.3+t.galaxy0 with the following parameters:

- param-files “Variant input file in VCF format”: Outputs of SnpSift Filter

- “Fields to extract”:

CHROM POS ID REF ALT FILTER

- SnpSift Extract Fields Tool: toolshed.g2.bx.psu.edu/repos/iuc/snpsift/snpSift_extractFields/4.3+t.galaxy0 with the following parameters:

QuestionNow let’s inspect the outputs for

Barcode10tag:

- How many variants were found by Clair3?

- How many variants were found after quality filtering?

- What is the Strain identified by the NCBI for the sample?

- Before filtering: 2,652

- After filtering 2,489

- Strain LT2, can be inferred searching the Chroms. NCBI Reference Sequence: NC_003197.2

Consensus Genome Building

For further anaylsis we have included one more step in this section, where we build the full genome of our samples.

This consensus genome can be used later to compare and relate samples together based on their full genome. In cases such as SARS-CoV2, it is important to do so in order to discover new outbreaks. In this example of the training, it is not really important to draw a tree of the full genomes of the samples as Salmonella does not have such a speedy outbreak as SARS-CoV2 does. However, we decided to include it in the workflow for any further analysis of the users, if needed.

For this step we run bcftools consensus (Li et al. 2009).

Hands-on: Consensus Genome Building

- bcftools consensus Tool: toolshed.g2.bx.psu.edu/repos/iuc/bcftools_consensus/bcftools_consensus/1.15.1+galaxy3 with the following parameters:

- param-files “VCF/BCF Data”: Outputs of SnpSift Filter tool

- “Choose the source for the reference genome”:

Use a genome from the history

- param-file “Reference genome”:

Salmonella_Ref_genome.fna.gz

QuestionInspect the bcftools consensus output for

Barcode11tag

- How many sequences did we get for the sample? What are they?

- Why?

- We got 2 sequences: the complete genome and the complete plasmid genome.

- The tool uses the reference genome and the variants found to build the consensus genome of the sample, and the reference genome FASTA file we use includes two sequences a complete one and a plasmid complete one. So the tool constructed both sequences for us to choose from, based on our further analysis.

Pathogen Tracking among all samples

CommentIf you did not get your Gene based pathogenic identification section output files needed yet or you got an error for some reason, you can go on and download them all or the ones missing from Zenodo so you can start this workflow, please don’t forget to create the collections for them as explained in the pervious hands-on.

Hands-on: Optional Data upload

Import all tabular and FASTA files needed for this section via link from Zenodo to the new created history:

https://zenodo.org/record/7593928/files/VFs_Barcode10.tabular https://zenodo.org/record/7593928/files/VFs_Barcode11.tabular https://zenodo.org/record/7593928/files/VFs_accessions_with_SampleID_Barcode10.tabular https://zenodo.org/record/7593928/files/VFs_accessions_with_SampleID_Barcode11.tabular https://zenodo.org/record/7593928/files/VFs_accessions_Barcode10.tabular https://zenodo.org/record/7593928/files/VFs_accessions_Barcode11.tabular https://zenodo.org/record/7593928/files/Contigs_Barcode10.fasta https://zenodo.org/record/7593928/files/Contigs_Barcode11.fasta

- Copy the link location

Open the Galaxy Upload Manager (galaxy-upload on the top-right of the tool panel)

- Select Paste/Fetch Data

Paste the link(s) into the text field

Press Start

- Close the window

In this last section, we would like to show how to aggregate results and use the results to help tracking pathogenes among samples by:

- Drawing a presence-absence heatmap of the identified VF genes within all samples to visualize in which samples these genes can be found.

- Drawing a phylogenetic tree for each pathogenic gene detected, where we will relate the contigs of the samples together where this gene is found.

With these two types of visualizations we can have an overview of all samples and the genes, but also how samples are related to each other, which common pathogenic genes they share. Given the time of the sampling and the location one can easily identify using these graphs, where and when the contamination has occurred among the different samples.

Hands-on: Organize data

Create a collection named

VFswithVFsfiles for both tags

- Click on galaxy-selector Select Items at the top of the history panel

- Check all the datasets in your history you would like to include

Click n of N selected and choose Build Dataset List

- Enter a name for your collection

- Click Create List to build your collection

- Click on the checkmark icon at the top of your history again

Create a collection named

VFs accessionswithVFs accessionsfiles for both tagsCreate a collection named

VFs accessions with SampleIDwithVFs accessions with SampleIDfilesCreate a collection named

ContigswithContigsfiles

Hands-on: All Samples Analysis

- Import the workflow into Galaxy

- Copy the URL (e.g. via right-click) of this workflow or download it to your computer.

- Import the workflow into Galaxy

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on galaxy-upload Import at the top-right of the screen

- Provide your workflow

- Option 1: Paste the URL of the workflow into the box labelled “Archived Workflow URL”

- Option 2: Upload the workflow file in the box labelled “Archived Workflow File”

- Click the Import workflow button

- Run Workflow 5: Nanopore Datasets - Reports of All Samples along with Full genomes and VF genes Phylogenetic trees workflow using the following parameters:

- “Send results to a new history”:

No- param-collection “Contigs”:

Contigs- param-collection “VFs”:

VFs- param-collection “VFs accessions with SampleID”:

VFs accessions with SampleID- param-collection “VFs accessions”:

VFs accessions

- Click on Workflow on the top menu bar of Galaxy. You will see a list of all your workflows.

- Click on the workflow-run (Run workflow) button next to your workflow

- Configure the workflow as needed

- Click the Run Workflow button at the top-right of the screen

- You may have to refresh your history to see the queued jobs

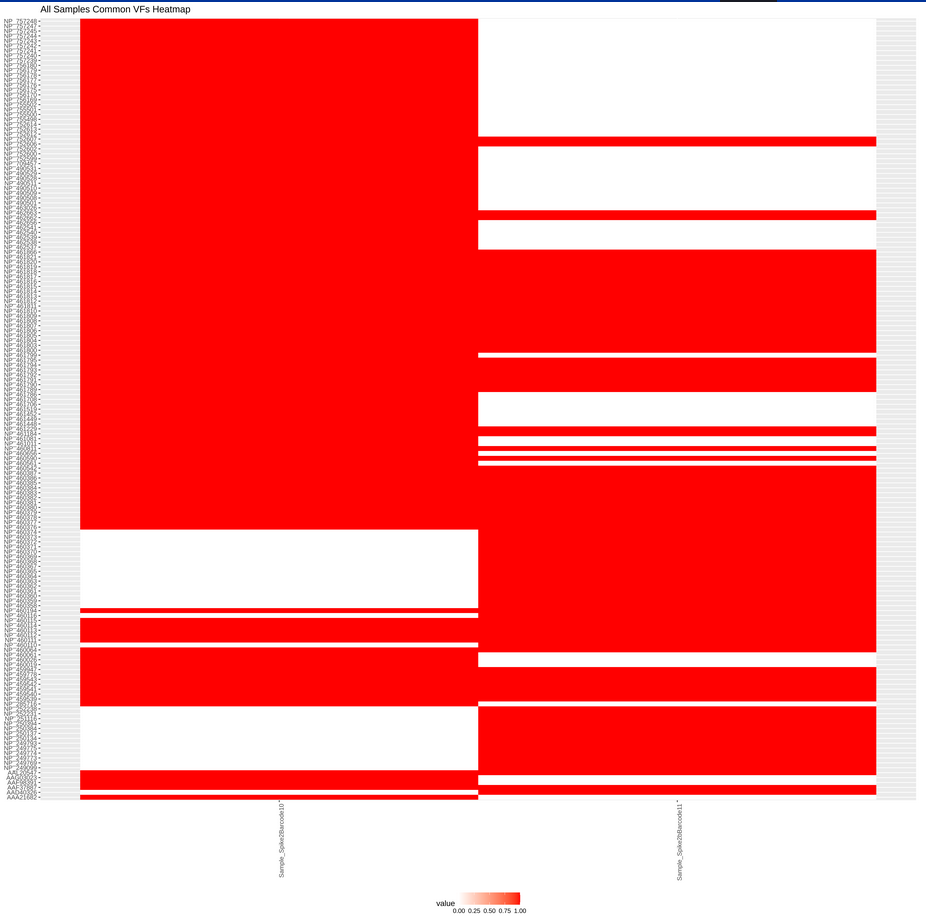

Heatmap

A heatmap is one of the visualization techniques that can give you a complete overview of all the samples together and whether or not a certain value exists. In this tutorial, we use the heatmap to visualize all samples aside and check which common bacteria pathogen genes are found in samples and which is only found in one of them.

We use Heatmap w ggplot tool along with other tabular manipulating tools to prepare the tabular files.

-

Combine VFs accessions for samples into a table and get 0 or 1 for absence / presence

Hands-on: Heatmap- Multi-Join Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_multijoin_tool/1.1.1 with the following parameters:

- param-file “File to join”:

VFs accessions with SampleID - Barcode10 - param-file “add additional file”:

VFs accessions with SampleID - Barcode11 - “Common key column”:

1 - “Column with values to preserve”:

Column: 1 - “Add header line to the output file”:

Yes - “Input files contain a header line (as first line)”:

Yes - “Ignore duplicated keys”:

Yes

- param-file “File to join”:

- Replace Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_find_and_replace/1.1.4 with the following parameters:

- param-file “File to process”: output of Multi-Join tool

- In “Find and Replace”:

- param-repeat “Insert Find and Replace”

- “Find pattern”:

^[^0]\w+ - “Replace with”:

1 - “Find-Pattern is a regular expression”:

Yes - “Replace all occurences of the pattern”:

Yes - “Ignore first line”:

Yes - “Find and Replace text in”:

specific column- “in column”:

Column: 2

- “in column”:

- “Find pattern”:

- param-repeat “Insert Find and Replace”

- Replace Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_find_and_replace/1.1.4 with the following parameters:

- param-file “File to process”: output of Replace tool

- In “Find and Replace”:

- param-repeat “Insert Find and Replace”

- “Find pattern”:

^[^0]\w+ - “Replace with”:

1 - “Find-Pattern is a regular expression”:

Yes - “Replace all occurences of the pattern”:

Yes - “Ignore first line”:

Yes - “Find and Replace text in”:

specific column- “in column”:

Column: 3

- “in column”:

- “Find pattern”:

- param-repeat “Insert Find and Replace”

- Multi-Join Tool: toolshed.g2.bx.psu.edu/repos/bgruening/text_processing/tp_multijoin_tool/1.1.1 with the following parameters:

-

Draw heatmap

Hands-on: Heatmap- Transpose Tool: toolshed.g2.bx.psu.edu/repos/iuc/datamash_transpose/datamash_transpose/1.1.0+galaxy2 with the following parameters:

- param-file “Input tabular dataset”: output of Replace tool

- Heatmap w ggplot Tool: toolshed.g2.bx.psu.edu/repos/iuc/ggplot2_heatmap/ggplot2_heatmap/3.4.0+galaxy0 with the following parameters:

- param-file “Select table”: output of Transpose tool

- “Select input dataset options”:

Dataset with header and row names- “Select column, for row names”:

Column: 1 - “Sample names orientation”:

horizontal

- “Select column, for row names”:

- “Plot title”:

All Samples Common VFs Heatmap - In “Output Options”:

- “width of output”:

15.0 - “height of output”:

17.0

- “width of output”:

- Transpose Tool: toolshed.g2.bx.psu.edu/repos/iuc/datamash_transpose/datamash_transpose/1.1.0+galaxy2 with the following parameters:

QuestionNow let’s see how your heatmap looks like, you can zoom-in and out in your Galaxy history.

- Mention three of the common bacterial pathogen genes found in both samples.

- How can the differences in the found VF bacteria pathogen genes between the two samples be interpreted?

- A lot of bacteria pathogen VF gene products identified by the VFDB are common in both samples, three of them are with the following accession number: NP_461810, NP_461809 and NP_459541

- AAG03023 is only found in

Barcode10sample and NP_460360 is only found inBarcode11sampleBoth samples were spiked with the same pathogen species, S. enterica, but not the same strain:

Barcode10_Spike2sample is spiked with S. enterica subsp. enterica strainBarcode11_Spike2bsample is spiked with S. enterica subsp. houtenae strain.This can be the main cause of the big similarities and the few differences of the bacteria pathogen VF gene products found between both of the two samples. Other factors such as the time and location of the sampling may cause other differences. By knowing the metadata of the samples inputted for the workflows in real life we can understand what actually happened. We can have samples with no pathogen found then we start detecting genes from the 7th or 8th sample, then we can identify where and when the pathogen entered the host, and stop the cause of that

Phylogenetic Tree building

Phylogenetic trees are nice ways to track all the bacterial pathogen genes one by one in all samples. So we will have a tree for every found gene, this tree will indicate which contigs the gene is found in all samples and relating these contigs together based on their topology. With such trees we can identify on which location of the sequences from the sample genome the pathogentic gene is found and whether it is at the same or a different location as the other samples. We can also see how many samples have these genes in common and accordingly track our contamination.

For the phylogenetic trees, for each bacteria pathogen gene found in the samples we use ClustalW (Larkin et al. 2007) for Multiple Sequence Alignment (MSA) needed before constructing a phylogenetic tree, for the tree itself we use FASTTREE and Newick Display (Dress et al. 2008) to visualize it.

To get the sequence to align, we need to extract the sequences of the VFs in the contigs:

Hands-on: Extract the sequences of the VFs

- Collapse Collecction Tool: toolshed.g2.bx.psu.edu/repos/nml/collapse_collections/collapse_dataset/5.1.0 with the following parameters:

- param-collection “Collection of files to collapse into single dataset”:

Contigs- “Prepend File name”:

Yes- Collapse Collection Tool: toolshed.g2.bx.psu.edu/repos/nml/collapse_collections/collapse_dataset/5.1.0 with the following parameters:

- param-collection “Collection of files to collapse into single dataset”:

VFs- “Keep one header line”:

Yes- “Prepend File name”:

Yes- Remove beginning Tool: Remove beginning1 with the following parameters:

- param-file “from”: output of 2nd Collapse Collection tool

- Split by group Tool: toolshed.g2.bx.psu.edu/repos/bgruening/split_file_on_column/tp_split_on_column/0.6 with the following parameters:

- param-file “File to split”: output of Remove beginning tool

- “on column”:

Column: 13- “Include header in splits?”:

Yes- Filter sequences by ID Tool: toolshed.g2.bx.psu.edu/repos/peterjc/seq_filter_by_id/seq_filter_by_id/0.2.7 with the following parameters:

- param-file “Sequence file to be filtered”: output of 1st Collapse Collection tool

- “Filter using the ID list from”:

tabular file

- param-collection “Tabular file containing sequence identifiers”: output of Split by group tool

- “Column(s) containing sequence identifiers”:

Column: 2- “Output positive matches, negative matches, or both?”:

Just positive matches (ID on list), as a single file

We can now run multiple sequence alignment, build the trees for each VF and display them.

Hands-on: Phylogenetic Tree building

- ClustalW Tool: toolshed.g2.bx.psu.edu/repos/devteam/clustalw/clustalw/2.1+galaxy1 with the following parameters:

- param-collection “FASTA file”: output of Filter sequences by ID tool

- “Data type”:

DNA nucleotide sequences- “Output alignment format”:

FASTA format- “Output complete alignment (or specify part to output)”:

Complete alignment- FASTTREE Tool: toolshed.g2.bx.psu.edu/repos/iuc/fasttree/fasttree/2.1.10+galaxy1 with the following parameters:

- “Aligned sequences file (FASTA or Phylip format)”:

fasta

- param-collection “FASTA file”: output of ClustalW tool

- “Set starting tree(s)”:

No starting trees- “Protein or nucleotide alignment”:

Nucleotide- Newick Display Tool: toolshed.g2.bx.psu.edu/repos/iuc/newick_utils/newick_display/1.6+galaxy1 with the following parameters:

- param-file “Newick file”: output of FASTTREE tool

- “Branch support”:

Hide branch support- “Branch length”:

Hide branch length- “Image width”:

2000

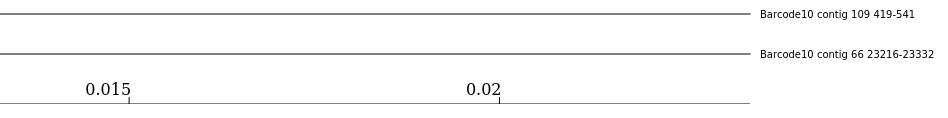

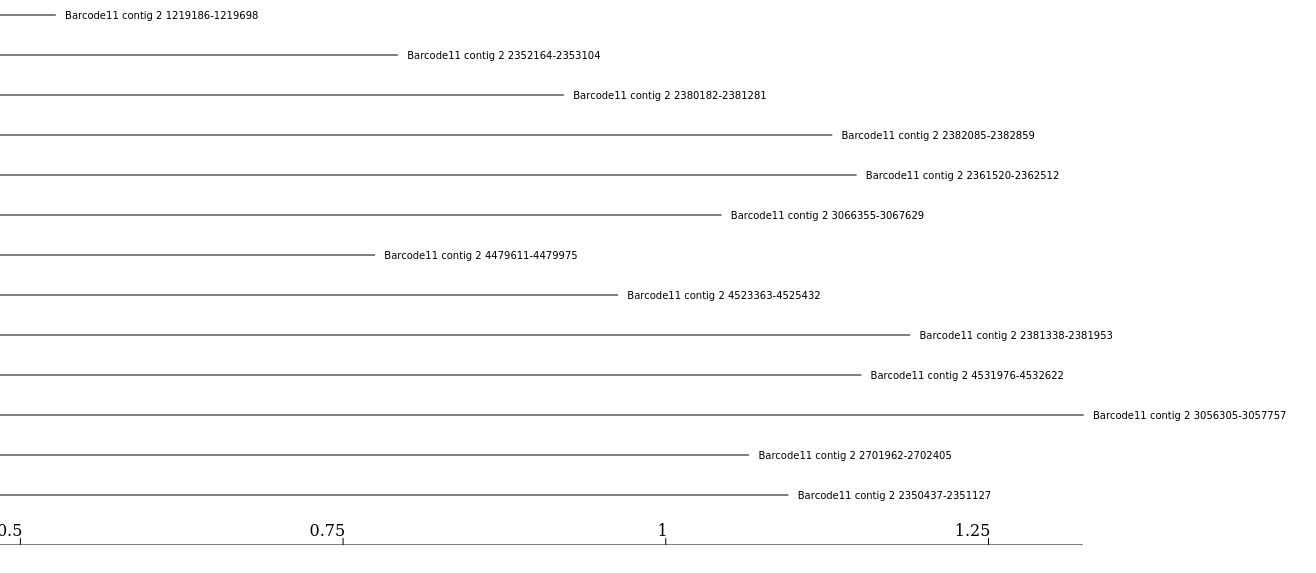

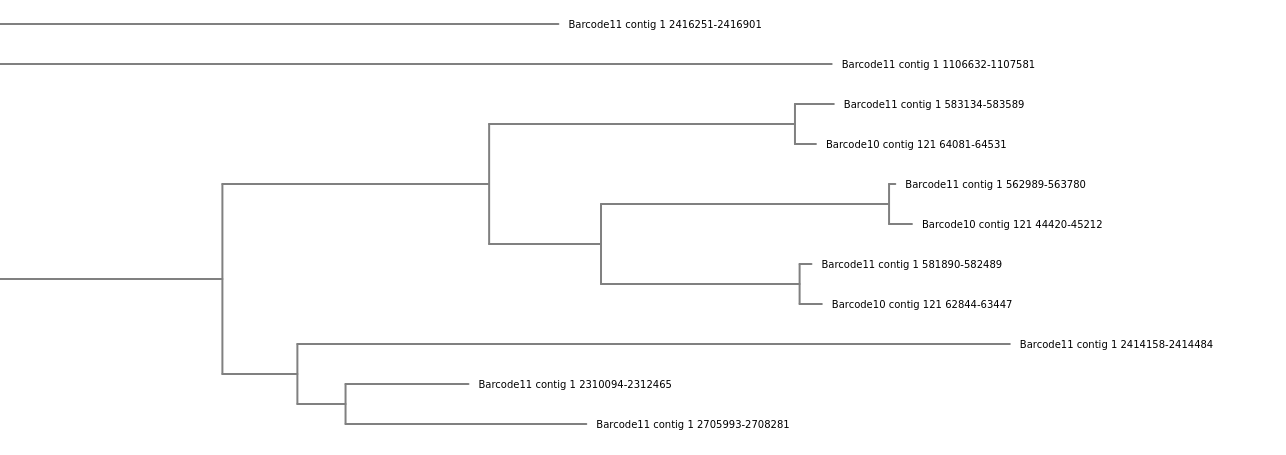

QuestionNow let’s see how your trees for the bacteria pathogen gene with accession IDs: AAF98391, NP_249099 and NP_460111 look like. To access that go to the output of Newick

- In which samples and contigs is gene BAA94855 found?

- In which samples and contigs is gene NP_249099 found?

- In which samples and contigs is gene NP_461819 found?

In the

Barcode10sample only: Contigs 109 and 66

In the

Barcode11sample only: Contig 2

In both samples; Contig 121 of

Barcode10and Contig 1 ofBarcode11

Conclusion

In this tutorial, we have tried the workflow designed to detect and track pathogens in our food and drinks. Through out the full workflow we used our Nanopore sequenced datasets from Biolytix and analyzed it, found the pathogens and tracked it. This approach can be summarized with the following scheme: